Today on the MSDN TSQL forums I was asked about a performance problem and to me the problem seemed to be directly related to parameter sniffing. The poster then stated that he is not using stored procedures, so it cannot be a parameter sniffing . Truth be told there are a lot of misconceptions surrounding parameter sniffing. The best way to understand parameter sniffing is to understand why it happens.

Parameter sniffing occurs when a parameterized query uses cached cardinality estimates to make query plan decisions. The problem occurs when the first execution has atypical parameter values. For each subsequent execution the optimizer is going to assume the estimates are good even though the estimates may be way off. For example, say you have a stored procedure that returns all id values between 1 and 1000. If the stored procedure is executed with this large range of parameter values, the optimizer is going to cache these atypical values, which indirectly causes the optimizer to under estimate cardinality. The problem is a typical execution may only return a few rows. This “sniffing” can cause queries to scan a table oppose to seek because the optimizer is assuming inaccurate cardinality estimates. The easiest way to tell if this problem is occurring in your environment, is to look at the query plan XML. Inside the query plan XML, you will see something similar to the code snippet below:

<ColumnReference Column="@1" ParameterCompiledValue="(1000)" ParameterRuntimeValue="(10)" />

<ColumnReference Column="@0" ParameterCompiledValue="(1)" ParameterRuntimeValue="(1)" />

In the snippet above, the query plan is assuming that column@1 has a value of 1000 and column @0 has a value of 1, while the actual runtime values are 10 and 1 respectively.

There are three different methods to incorporate parameterization in SQL Server, auto/simple parameterization, stored procedures, and dynamic TSQL (executed with sp_executesql). One of the most common misconceptions I have seen surrounding parameter sniffing is thinking that it is limited to stored procedures. Now that we know more about parameter sniffing, lets have a look at an example. I will be using the AdventureWorks database for my example. In this example, I will select a few rows from the Sales.SalesOrderHeader table and then issue the same query, but return a lot more rows.

Code:

SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between 1 and 10

SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between 1 and 500

Query Plan:

As you can see, the query plan changes based on the number of rows returned. The reason being is in this case is the optimizer hit a tipping point where the cost of the key lookup is greater than an index scan. Let’s see what happens when a parameter sniffing problem occurs.

DBCC freeproccache

GO

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End;',N'@Start INT,@End INT',@Start=1,@End=500

GO

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End;',N'@Start INT,@End INT',@Start=1,@End=10

GO

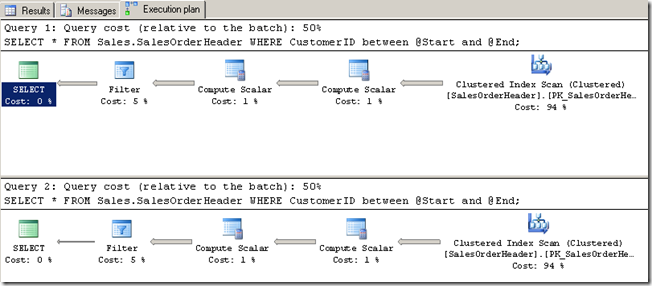

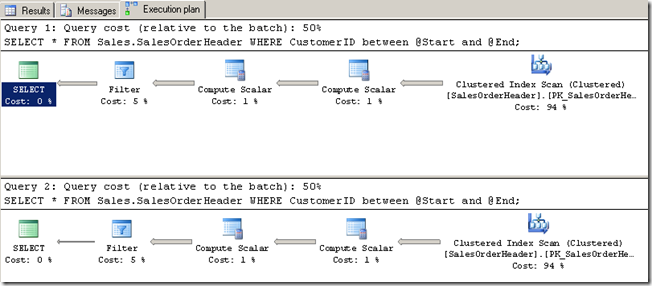

Query Plans:

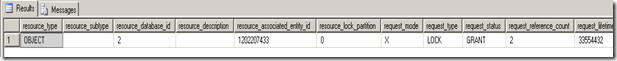

The execution plans are identical for both queries even though the number of rows greatly decreased. This is a parameter sniffing problem. This problem occurs because we executed and cached the atypical execution that is returning customerid values between 1 and 500. We can look into the execution plan and see the compiled parameter values and we can look at the execution plan estimated rows to validate.

<ColumnReference Column="@End" ParameterCompiledValue="(500)" ParameterRuntimeValue="(10)" />

<ColumnReference Column="@Start" ParameterCompiledValue="(1)" ParameterRuntimeValue="(1)" />

What can you do to solve the parameter sniffing problem? You have a few options that you can use to solve the parameter sniffing problem. You can use a local variables, this makes the optimizer use the density of the table to estimate cardinality, option recompile, or use the optimize for hint.

--Declare local variables

EXEC sp_executesql N'declare @dynStart INT,@dynEnd INT; SET @dynStart=@Start; SET @dynEnd=@End;SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @dynStart and @dynEnd;',N'@Start INT,@End INT',@Start=1,@End=500

EXEC sp_executesql N'declare @dynStart INT,@dynEnd INT; SET @dynStart=@Start; SET @dynEnd=@End;SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @dynStart and @dynEnd;',N'@Start INT,@End INT',@Start=1,@End=10

--Solution Using option(recompile)

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End OPTION(RECOMPILE);',N'@Start INT,@End INT',@Start=1,@End=500

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End OPTION(RECOMPILE);',N'@Start INT,@End INT',@Start=1,@End=10

--Solution Using OPTIMIZE FOR HINT

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End OPTION(OPTIMIZE FOR (@Start=1,@End=10));',N'@Start INT,@End INT',@Start=1,@End=500

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End OPTION(OPTIMIZE FOR (@Start=1,@End=10));',N'@Start INT,@End INT',@Start=1,@End=10

--Solution Using OPTIMIZE FOR UNKNOWN (SQL 2008 only)

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End OPTION(OPTIMIZE FOR (@Start UNKNOWN,@End UNKNOWN));',N'@Start INT,@End INT',@Start=1,@End=500

EXEC sp_executesql N'SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID between @Start and @End OPTION(OPTIMIZE FOR (@Start UNKNOWN,@End UNKNOWN));',N'@Start INT,@End INT',@Start=1,@End=10

Now each of the above methods will help alleviate some of the problems associated with parameter sniffing, but that does not mean it will give you an optimal query plan. You should test each of the methods to see which makes the most sense for your environment. If none of these options perform well, another option is to use control flow logic to execution different variations of the TSQL or stored procedure, allowing for more control over which execution plan gets used. The thing to remember here, is you have to cater to your customers and their usage patterns to ultimately decide which solution is best for your environment.

Until next time happy coding.