In a previous post, I demonstrated how to split a finite array of elements, using XML, http://jahaines.blogspot.com/2009/06/converting-delimited-string-of-values.html. The method presented in my previous post can only be used when there is a known number of elements. In this post, I will be focusing on the methods most commonly used today to parse an array, when the number of elements is unknown. This part one of a two part series where I look at the differing methods used to split an array of strings, using SQL Server 2005 and 2008. There are three primary methods to parse an array. The first method takes advantage of a numbers table to quickly parse the string. The second method uses the new XML functionality built into SQL Server 2005. The final method uses a TVF, without a permanent numbers table.

Let’s get started by creating are sample table.

SET NOCOUNT ON

GO

IF EXISTS(SELECT 1 FROM sys.tables WHERE NAME = 't')

BEGIN

DROP TABLE dbo.[t];

END

GO

CREATE TABLE dbo.t(

SomeId INT NOT NULL PRIMARY KEY,

SomeCode CHAR(2)

);

GO

;WITH

L0 AS (SELECT 1 AS C UNION ALL SELECT 1) --2 rows

,L1 AS (SELECT 1 AS C FROM L0 AS A, L0 AS B) --4 rows (2x2)

,L2 AS (SELECT 1 AS C FROM L1 AS A, L1 AS B) --16 rows (4x4)

,L3 AS (SELECT 1 AS C FROM L2 AS A, L2 AS B) --256 rows (16x16)

,L4 AS (SELECT 1 AS C FROM L3 AS A, L3 AS B) --65536 rows (256x256)

,L5 AS (SELECT 1 AS C FROM L4 AS A, L4 AS B) --4,294,967,296 rows (65536x65536)

,Nums AS (SELECT row_number() OVER (ORDER BY (SELECT 0)) AS N FROM L5)

INSERT dbo.t (SomeId,SomeCode)

SELECT

N,

CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65) AS SomeCode

FROM Nums

WHERE N<=10000;

GO

CREATE NONCLUSTERED INDEX ncl_idx_SomeCode ON dbo.t(SomeCode);

GO

The first method I will demonstrate is the Numbers table method. This is probably the most efficient method of all the methods listed, but performance does vary among environments. Another great benefit of this method is that it works with SQL Server 2000 and greater.

The first step in using this method is to create a table of numbers. A table is just what it sounds like… a table of natural number starting from 1 and going to n, where is the maximum number you want in the table. This method really performs well because of a clustered index on the number column, which allows for very fast index seeks. Here is the code I use to generate my numbers table.

--=============================================================================

-- Setup

--=============================================================================

USE [tempdb]

GO

SET NOCOUNT ON

GO

--=============================================================================

-- Create and populate a Numbers table

--=============================================================================

--===== Conditionally drop

IF OBJECT_ID('dbo.Numbers') IS NOT NULL

BEGIN

DROP TABLE dbo.Numbers;

END

GO

CREATE TABLE dbo.[Numbers](

N INT NOT NULL

);

GO

;WITH

L0 AS (SELECT 1 AS C UNION ALL SELECT 1) --2 rows

,L1 AS (SELECT 1 AS C FROM L0 AS A, L0 AS B) --4 rows (2x2)

,L2 AS (SELECT 1 AS C FROM L1 AS A, L1 AS B) --16 rows (4x4)

,L3 AS (SELECT 1 AS C FROM L2 AS A, L2 AS B) --256 rows (16x16)

,L4 AS (SELECT 1 AS C FROM L3 AS A, L3 AS B) --65536 rows (256x256)

,L5 AS (SELECT 1 AS C FROM L4 AS A, L4 AS B) --4,294,967,296 rows (65536x65536)

,Nums AS (SELECT row_number() OVER (ORDER BY (SELECT 0)) AS N FROM L5)

INSERT Numbers

SELECT N FROM Nums

WHERE N<=10000;

GO

ALTER TABLE dbo.Numbers ADD CONSTRAINT PK_N

PRIMARY KEY CLUSTERED ([N])WITH(FILLFACTOR = 100);

GO

Next, we will need to create an Inline TVF (Table Valued Function) to split the array. This function is written by SQL Server guru Itzik Ben-Gan. This split function is very fast and very scalable.

IF OBJECT_ID('dbo.fn_split') IS NOT NULL

DROP FUNCTION dbo.fn_split;

GO

CREATE FUNCTION dbo.fn_split(@arr AS NVARCHAR(2000), @sep AS NCHAR(1))

RETURNS TABLE

AS

RETURN

SELECT

(n - 1) - LEN(REPLACE(LEFT(@arr, n-1), @sep, N'')) + 1 AS pos,

SUBSTRING(@arr, n, CHARINDEX(@sep, @arr + @sep, n) - n) AS element

FROM dbo.Numbers

WHERE n <= LEN(@arr) + 1

AND SUBSTRING(@sep + @arr, n, 1) = @sep;

GO

This function’s logic is pretty straight forward, but I will discuss how it works. The numbers table is used to iterate through each character in the array. As you can see the first step is to pad the beginning of the string with the delimiter. With all the delimiters in place, the code can determine the position of each delimiter. Once the logic has the delimiter’s position, the code logics utilizes the CHARINDEX() and SUBSTRING() system functions to extract each element and it’s corresponding position.

Now that we know how this code works, lets see it in action.

DECLARE @Ids VARCHAR(1000)

SET @Ids = '1,500,5439,9999,7453'

SELECT t.*

FROM dbo.t

INNER JOIN dbo.fn_split(@Ids,',') AS fn

ON t.SomeId = fn.Element

/*

SomeId SomeCode

----------- --------

1 SQ

500 BO

5439 ZV

9999 RD

7453 IG

*/

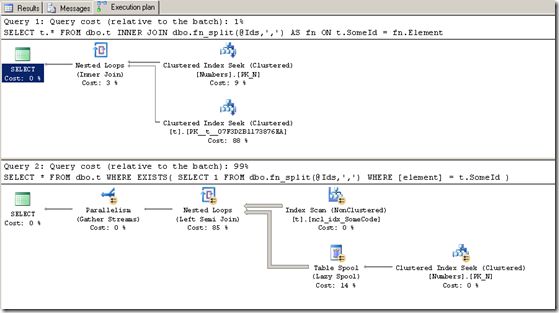

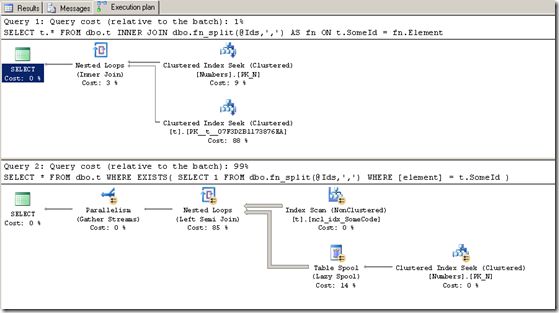

Before we move onto the next method, I would like to point out that some developers love to use the exists clause for this situation, especially when the developer does not need any columns from the TVF; however, exists may degrade performance. I am planning to do an in-depth post regarding the differences between inner join and EXISTS. To give you an idea of the how exists can degrade performance, have a look at the screenshot below. Please note that performance is not always black or white and all executions plans will not deviate as much as the one below.

Execution Plan:

IO Stats:

********************* inner join *************************

Table 't'. Scan count 0, logical reads 14, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Numbers'. Scan count 1, logical reads 3, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

********************* exists *************************

Table 'Worktable'. Scan count 2, logical reads 20018, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Numbers'. Scan count 2, logical reads 6, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 't'. Scan count 3, logical reads 46, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

As you can see, the difference between the inner join and the exist query plans are night and day. I will post on this at a later date, but I wanted to make you aware of possible performance problems.

The next method I will be discussing is the XML nodes method. This method does not require an ancillary table, but does require SQL Server 2005 and greater. This method does handle XML special characters. I picked up this encoding/decoding method from SQL Server enthusiast Brad Schulz, http://bradsruminations.blogspot.com/.

DECLARE @Ids VARCHAR(1000)

SET @Ids = '1,500,5439,9999,7453'

SELECT t.*

FROM dbo.t

INNER JOIN(

SELECT x.i.value('.','INT') AS SomeId

FROM(SELECT XMLEncoded=(SELECT @Ids AS [*] FOR XML PATH(''))) AS EncodeXML

CROSS APPLY (SELECT NewXML=CAST('<i>'+REPLACE(XMLEncoded,',','</i><i>')+'</i>' AS XML)) CastXML

CROSS APPLY NewXML.nodes('/i') x(i)

) AS Ids

ON Ids.SomeId = T.SomeId

The XML method looks a lot more complex than it really is. Essentially, what I am doing is creating an XML structure that contains each of the elements of the array. For example, the array “1,500,5439,9999,7453” becomes “<i>1</i><i>500</i><i>5439</i><i>9999</i><i>7453</i>.” The first step is to encode the array by using FOR XML PATH. Once the array is in an encoded string format, I explicitly cast the xml string into an XML data type. Once I have the XML in a decoding XML format, I use the XML nodes method to put the XML values into a relational format. For more information about how this method works, you can view the following blog post by Brad Schulz, http://bradsruminations.blogspot.com/2009/10/un-making-list-or-shredding-of-evidence.html. This post does a great job of breaking down the inner workings of this method.

The final method I will be demonstrating uses a TVF function with a virtual table of numbers. The TVF method does not require an ancillary table because a numbers table is generated on the fly.

IF OBJECT_ID('dbo.fn_TVF_Split') IS NOT NULL

DROP FUNCTION dbo.fn_TVF_Split;

GO

CREATE FUNCTION dbo.fn_TVF_Split(@arr AS NVARCHAR(2000), @sep AS NCHAR(1))

RETURNS TABLE

AS

RETURN

WITH

L0 AS (SELECT 1 AS C UNION ALL SELECT 1) --2 rows

,L1 AS (SELECT 1 AS C FROM L0 AS A, L0 AS B) --4 rows (2x2)

,L2 AS (SELECT 1 AS C FROM L1 AS A, L1 AS B) --16 rows (4x4)

,L3 AS (SELECT 1 AS C FROM L2 AS A, L2 AS B) --256 rows (16x16)

,L4 AS (SELECT 1 AS C FROM L3 AS A, L3 AS B) --65536 rows (256x256)

,L5 AS (SELECT 1 AS C FROM L4 AS A, L4 AS B) --4,294,967,296 rows (65536x65536)

,Nums AS (SELECT row_number() OVER (ORDER BY (SELECT 0)) AS N FROM L5)

SELECT

(n - 1) - LEN(REPLACE(LEFT(@arr, n-1), @sep, N'')) + 1 AS pos,

SUBSTRING(@arr, n, CHARINDEX(@sep, @arr + @sep, n) - n) AS element

FROM Nums

WHERE

n <= LEN(@arr) + 1

AND SUBSTRING(@sep + @arr, n, 1) = @sep

AND N<=1000

GO

Now that the function is in place, you can do the same join as before.

DECLARE @Ids VARCHAR(1000)

SET @Ids = '1,500,5439,9999,7453'

SELECT t.*

FROM dbo.t

INNER JOIN dbo.fn_TVF_split(@Ids,',')

ON t.SomeId = Element

This method is really the same method as provided before, except it does not take advantage of a permanent numbers table.

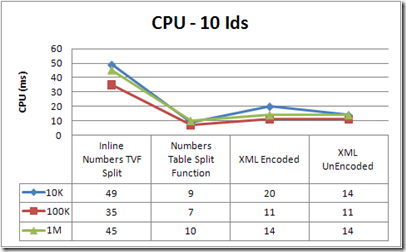

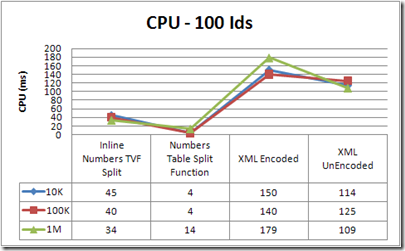

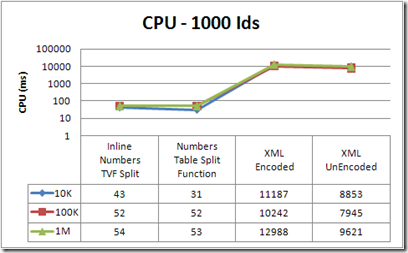

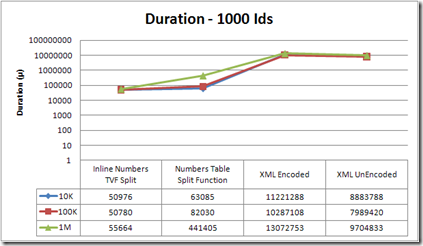

I have shown the three most common and best performing methods for splitting a delimited string. Which method do you use? As you can imagine, this answer depends on the distribution of data, size of tables, indexes etc.. One method is not always going to be better than another method, so my recommendation is to test each method and choose the one that makes the most sense for you environment. In part two of this series, I am really going to dig into how each of these methods performs on varying sized tables and strings. This should give you a better idea of which method to choose based on your data, but as stated before the results may vary depending on several environmental factors.

Until next time, happy coding.