I have seen a lot queries written in forums and from differing developers over the past few years and have noticed an increasing trend in “sloppy” code. Most of the “sloppy’” code that I am referring to has either an invalid search argument, or a function in the predicate. It seems developers these days are in such a rush to get code out they forget the principal mechanics of a properly tunned query. A lot of times a developer will put whatever in the predicate without knowing the performance implications. So how exactly do these type of queries affect performance? The short answer is the optimizer typically has to use an index/table scan to satisfy the query because the filters are not adequate to seek the row. In this post, I will be primarily focusing on invalid search arguments (non SARGable queries).

We will start of by defining what valid search arguments are. Essentially you should have an inclusive operator to seek an index. So what the heck is an inclusive operator? Inclusive operators are =, BETWEEN, >, >=, <=, <, and like (begins with only). Now that we have our valid search arguments, we know that our query predicate should include one of these operators; otherwise, an index seek will be unlikely. You will notice that the list of available operators does not include: IS NULL, IS NOT NULL, <>, and like (contains). Does this mean that these operators are incapable of using a seek? No, it does not. It should be known that the optimizer may not be able to generate accurate cardinality estimates for the “not equal to” operator. What I want to dig into is the implications of using invalid search arguments and I will be focus on the “<>” operator.

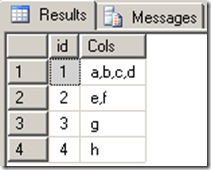

Let’s start by creating and populating a sample table.

USE [tempdb]

GO

IF OBJECT_ID('dbo.TestData') IS NOT NULL

BEGIN

DROP TABLE dbo.[TestData];

END

GO

SELECT TOP 100000

RowNum = IDENTITY(INT,1,1),

SomeID = ABS(CHECKSUM(NEWID()))%2500+1,

SomeCode = CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65)

INTO dbo.TestData

FROM Master.dbo.SysColumns t1,

Master.dbo.SysColumns t2

GO

ALTER TABLE dbo.TestData

ADD PRIMARY KEY CLUSTERED (RowNum);

GO

CREATE INDEX IXC_TestData_Cover1 ON dbo.TestData(SomeId) ;

GO

CREATE INDEX IXC_TestData_Cover2 ON dbo.TestData(somecode) ;

GO

Now that we have our table, lets start by running a simple query using “not equal to.”

DBCC DROPCLEANBUFFERS

DBCC FREEPROCCACHE

SELECT

rownum,

someid,

[SomeCode]

FROM dbo.[TestData] t1

WHERE

t1.[SomeId] <> 0

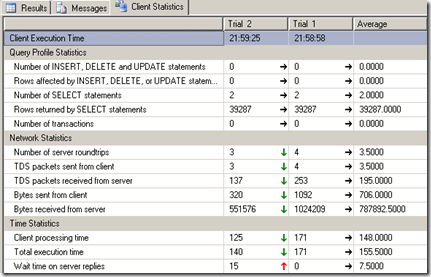

Here is the query plan and the IO/TIME stats

SQL Server parse and compile time:

CPU time = 0 ms, elapsed time = 0 ms.

Table 'TestData'. Scan count 1, logical reads 238, physical reads 1, read-ahead reads 146, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times:

CPU time = 47 ms, elapsed time = 1477 ms.

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 0 ms.

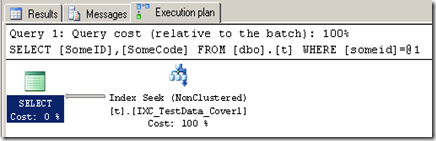

As you can see, the query performed like we thought it would by scanning the index to satisfy the query, but what happens when we cover the query with our index? The answer may be quite surprising. To test this we need to reduce the number of columns in the select list.

Here is the new query: Note: we can leave rownum in the select list because this column is part of the clustering key, which is included in non clustered indexes.

SET NOCOUNT ON;

GO

DBCC DROPCLEANBUFFERS

DBCC FREEPROCCACHE

SET STATISTICS IO ON;

SET STATISTICS TIME ON;

SELECT

rownum,

someId

FROM dbo.[TestData] t1

WHERE

t1.[SomeId] <> 0

SET STATISTICS IO OFF;

SET STATISTICS TIME OFF;

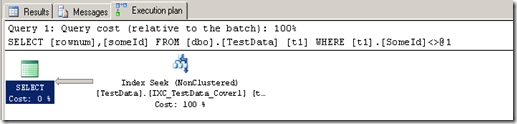

Here is the query plan and the IO/TIME stats

SQL Server parse and compile time: CPU time = 0 ms, elapsed time = 0 ms. Table 'TestData'. Scan count 2, logical reads 179, physical reads 1, read-ahead reads 153, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times: CPU time = 94 ms, elapsed time = 1207 ms.

SQL Server Execution Times: CPU time = 0 ms, elapsed time = 0 ms.

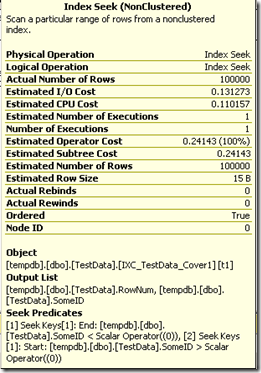

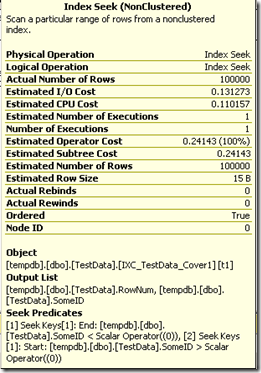

The results are pretty interesting. We actually get an index seek because of the covering index, but the IO and CPU Time actually increased. You are probably screaming at the screen saying,” HOLD ON BUDDY YOU JUST SAID THAT <> IS NOT A VALID SEARCH ARGUMENT!!!” and you would be right. The answer is actually quite simple…. the “not equal to” operator did NOT seek the row. If you mouse over the index seek show plan operator you will see that the seek predicate actually gets transformed into a > and <. So this is where myself and other SQL Server enthusiasts debate . This begs the question that if the optimizer can change the “not equal to” operator into a ranged search… does this make “not equal to” a valid search argument? I leave this up to you to decide, I however say no. In my opinion, the < and > are valid search operators, not the "<>". I cannot find any documentation on how/when the predicate transformation actually occurs. The main question I have is, can we definitively say that the optimizer will ALWAYS transform the predicate. If the answer is yes, then we can say it is a valid search argument, but until then I have to say no. I will most definitely agree that the optimizer is usually pretty smart in using the index to search inequalities. Here is a screenshot of the index show plan operator.

Now let’s do what I like to do and rewrite inequalities to be valid SARG.

SET NOCOUNT ON;

GO

DBCC DROPCLEANBUFFERS

DBCC FREEPROCCACHE

SET STATISTICS IO ON;

SET STATISTICS TIME ON;

SELECT

rownum,

someId

FROM dbo.[TestData] t1

WHERE

t1.[SomeId] < 0 OR t1.[SomeID] > 0

SET STATISTICS IO OFF;

SET STATISTICS TIME OFF;

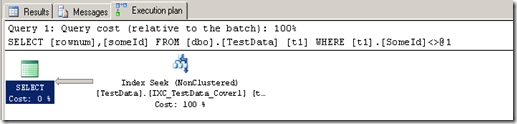

The query plan and IO/Time Stats:

SQL Server parse and compile time: CPU time = 0 ms, elapsed time = 0 ms. Table 'TestData'. Scan count 2, logical reads 179, physical reads 1, read-ahead reads 153, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times: CPU time = 94 ms, elapsed time = 1207 ms.

SQL Server Execution Times: CPU time = 0 ms, elapsed time = 0 ms.

So what’s the verdict? Well in this case the optimizer choose the exact same plan with the exact same IO/CPU time. So where does that leave us? I invite each of you to decide for yourselves, but my opinion is to stick with best practices and with valid search arguments. While these queries are theoretically the same, I believe a predicate should be as SARG friendly as possible. In doing so the developer can ensure the optimizer is able to make the best decision possible. The questions on the table are, How consistent is the optimizer in transforming the query, especially if the optimizer cannot accurately measure cardinality and are valid search arguments still relevant? I cant answer the first question now, perhaps I can come up with an example, but this is a question maybe more appropriate for the query optimization team. I answer yes to SARG relevance. Valid search arguments give the optimizer better cardinality estimates and is a more inclusive search, which usually translates into better and more consistent performance. With that said, the optimizer is able to handle many invalid search argument variants and successfully transform those queries into something useful, but do we really want to rely on the optimizer to “automagically” fix our code?

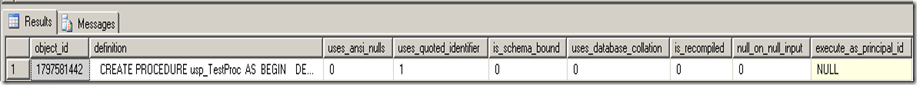

Links: