If you have been following this series, I have been focusing on many different aspects of dynamic pivoting using SQL 2005. In the first part of this series,http://jahaines.blogspot.com/2009/08/pivoting-data-using-sql-2005-part-1.html, I focused on creating a static pivot query. In the second part of this series, http://jahaines.blogspot.com/2009/08/pivoting-data-using-sql-2005-part-2.html,I focused on creating a dynamic pivot, where the pivot values were unknown. We are now going to ramp up this series with a completely dynamic pivot. In this series, will will pivot data where the columns are stored as rows in a table and the values are not known.

In this example, we will be looking at employee sales. Let’s start by creating and populating our sample tables.

SET NOCOUNT ON

GO

IF OBJECT_ID('tempdb..#Pivot_Columns') IS NOT NULL

BEGIN

DROP TABLE #Pivot_Columns;

END

GO

CREATE TABLE #Pivot_Columns(

ColId INT PRIMARY KEY,

TableName VARCHAR(25),

ColName VARCHAR(25)

);

GO

INSERT INTO #Pivot_Columns VALUES (1,'#Sales','YearMonth');

INSERT INTO #Pivot_Columns VALUES (2,'#Sales','SalesAmt');

IF OBJECT_ID('tempdb..#EmpSales') IS NOT NULL

BEGIN

DROP TABLE #EmpSales;

END

GO

CREATE TABLE #EmpSales(

EmpSalesId INT IDENTITY(1,1) PRIMARY KEY,

EmpId INT,

SalesId INT,

ColId INT,

ColTxt VARCHAR(50)

);

GO

INSERT INTO #EmpSales VALUES (1,1,1,'2008-08');

INSERT INTO #EmpSales VALUES (1,1,2,'10000');

INSERT INTO #EmpSales VALUES (2,3,1,'2008-07');

INSERT INTO #EmpSales VALUES (2,3,2,'8500.25');

INSERT INTO #EmpSales VALUES (3,4,1,'2008-08');

INSERT INTO #EmpSales VALUES (3,4,2,'5999.99');

Now we have our table, let’s have a look at an example.

DECLARE @sql NVARCHAR(MAX),

@Pivot_Cols VARCHAR(MAX)

--The code below reads through all the data in the table and

--builds a distinct column list.

SELECT

@Pivot_Cols = COALESCE(@Pivot_Cols + ',','') + QUOTENAME(ColName)

FROM #Pivot_Columns

GROUP BY QUOTENAME([ColName])

ORDER BY QUOTENAME(ColName) ASC

SELECT @sql = N'

SELECT

EmpId,' + @Pivot_Cols + '

FROM(

SELECT e.EmpId, e.ColTxt,pc.ColName

FROM #EmpSales e

INNER JOIN #Pivot_Columns pc ON e.ColId = pc.ColId

) AS dataToPivotTable

PIVOT(

MAX(ColTxt) --Aggregate Function

FOR [ColName]-- Column To Pivot

IN (' + @Pivot_Cols + ') --Values to Pivot

) AS whatToPivotOnTable;'

--PRINT @sql

EXEC sp_executesql @sql

GO

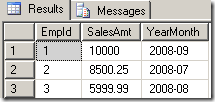

Results:

As you can see from the screenshot above, we have successfully pivoting the rows of data into columns. Is this all that there is to dynamic pivoting?…..not quite. If you look closely at the example above you will notice that we are using the MAX aggregate to get the maximum of each column. This is fine if you expect a single set of rows, but what do you think will happen when an employee has more than one set of rows? The short answer is the data would be rendered invalid. The data may become invalid because the maximum YearMonth value, may be from a different month than the maximum SalesAmt value. To demonstrate this behavior, we will add a new set of rows into our table.

INSERT INTO #EmpSales VALUES (1,2,1,'2008-09');

INSERT INTO #EmpSales VALUES (1,2,2,'1');

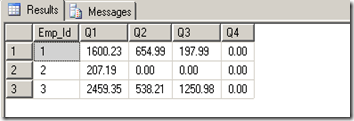

Now execute the same query and your results should look like the screenshot below. The sales amount column has a maximum value of 10,000 and the YearMonth is 2008-09. If you look carefully at the data we inserted, the SalesAmt value should be 1, for 2008-09, not 10,000.

So how do we resolve this issue? Let’s press on. We are going to modify our query a bit.

DECLARE @sql NVARCHAR(MAX),

@Pivot_Cols VARCHAR(MAX)

--The code below reads through all the data in the table and

--builds a distinct column list.

SELECT

@Pivot_Cols = COALESCE(@Pivot_Cols + ',','') + QUOTENAME(e.ColTxt)

FROM #EmpSales e

INNER JOIN [#Pivot_Columns] pc

ON e.[ColId] = pc.[ColId]

WHERE pc.[ColName] = 'YearMonth'

GROUP BY QUOTENAME(e.ColTxt)

ORDER BY QUOTENAME(e.ColTxt)

SELECT @sql = N'

SELECT

EmpId,' + @Pivot_Cols + '

FROM(

SELECT

e.EmpId,

e.ColTxt as MonthYear,

CONVERT(NUMERIC(9,2),e2.[ColTxt]) AS [ColTxt]

FROM #EmpSales e INNER JOIN #EmpSales e2 ON e.EmpId = e2.EmpId AND e.SalesId = e2.SalesId

INNER JOIN #Pivot_Columns pc ON pc.ColID = e.[ColId]

INNER JOIN #Pivot_Columns pc2 ON pc2.ColId = e2.[ColId]

WHERE pc.ColName = ''YearMonth'' AND pc2.ColName = ''SalesAmt''

) AS dataToPivotTable

PIVOT(

SUM([ColTxt]) --Aggregate Function

FOR [MonthYear]-- Column To Pivot

IN (' + @Pivot_Cols + ') --Values to Pivot

) AS whatToPivotOnTable;'

PRINT @sql

EXEC sp_executesql @sql

GO

The code is very similar in most respects, but the biggest difference is I am not pivoting on ColName. Instead, I am going back to the methods we learned in part 2 of this series. The key to dynamically pivoting data is to know your data. Depending on what you are trying to pivot, you may need to aggregate data or simply use the MAX pivot. In this case, we need to use a more powerful pivot statement. In the variable assignment portion of the modifed code, I am strictly querying #EmpSales table, making sure to filter for column “YearMonth.” Within the Pivot code, I select the needed data by using a self referencing join. We need a self join because our next step is to create a row of data that contains the SalesAmt for each EmpId’s salesId. Once we have this information we can use the pivot operator to perform the aggregation.

Results:

How about them apples? As you can see, the new pivot reflects the correct totals for each month. We can also create a version of this code that works with prior versions of SQL Server.

DECLARE @Pivot_Cols VARCHAR(MAX)

DECLARE @sql NVARCHAR(MAX)

SELECT

@Pivot_Cols = COALESCE(@Pivot_Cols + ',','')

+ N'SUM(CONVERT(NUMERIC(9,2),CASE WHEN pc.[ColName] = ''YearMonth'' AND e.ColTxt ='

+ QUOTENAME(e.ColTxt,'''') + ' THEN ' + ' e2.[ColTxt] ELSE NULL END)) AS '

+ QUOTENAME(e.ColTxt,'''') + CHAR(13)

FROM #EmpSales e

WHERE e.ColId = 1

GROUP BY e.ColTxt

ORDER BY e.ColTxt

SET @sql = N'SELECT e.EmpId,' + CHAR(13)

+ @Pivot_Cols + CHAR(13)

+ 'FROM #EmpSales e INNER JOIN #EmpSales e2 ON e.EmpId = e2.EmpId AND e.SalesId = e2.SalesId' + CHAR(13)

+ 'INNER JOIN #Pivot_Columns pc ON pc.ColID = e.[ColId]' + CHAR(13)

+ 'INNER JOIN #Pivot_Columns pc2 ON pc2.ColId = e2.[ColId]' + CHAR(13)

+ 'WHERE pc.ColName = ''YearMonth'' AND pc2.ColName = ''SalesAmt''' + CHAR(13)

+ 'GROUP BY e.[EmpId]'

PRINT @sql

EXEC sp_executesql @sql

So there you have it. A completely dynamic method to pivot data. So what have we learned over these last few posts………….. well I hope that you have learned something :-). I hope that you learned pivoting data in SQL Server can be very simple. Pivoting data can sometimes become a little hairy, but is still a powerful and somewhat simplistic solution to implement. <step on soapbox> Hopefully, Microsoft will give us a pivot operator that is somewhat flexible and dynamic. A native dynamic pivot operator allows for more scalable code and reduced SQL injection risk.; however, I really doubt that we will get any dynamic pivot operator, in the near future</step off soapbox>. Anyway, I hope that you have enjoyed reading this series and that you can use this in your environment.

Until next time… happy coding.