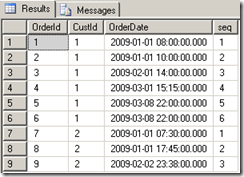

For those of you who may be wondering what the heck an UPSERT is, an UPSERT is an UPDATE and INSERT into a given table. Typically, an UPSERT operation requires two separate transactions. Usually, the first transaction issued is the UPDATE and then the INSERT is issued shortly after. In this post I will be extending the package I created, in my previous post, http://jahaines.blogspot.com/2009/09/ssis-only-inserting-rows-that-do-not.html. Once your SSIS package resembles the package in the link, you can proceed with the next step. If you want to skip the prior post, you can download the package at the bottom on this post.

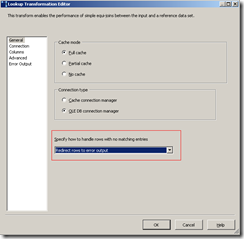

The first item of interest is the Lookup transformation. First I will drag an OLE DB Command onto the canvas and connect it to the Lookup transformation. A dialog box should appear. When the dialog box appears choose the Lookup Match Output, in the Output dropdown list. An OLE DB Command, is a parameterized TSQL statement that can issued against a given data set. Before I start configuring the transformation, I would like to point out that this method is extremely easy to setup, but has a major drawback. The OLE DB Command has to be issued for each row returned by the Input, so this type of process works recursively or iteratively. I will demonstrate another method later in this post. With that out of the way, I will start configuring the transformation. First, I will build an UPDATE command using parameters for each column and the predicate.

My canvas currently looks like the screenshot below.

The next step is to configure the OLE DB Command transformation. Double-click the OLE DB Command transformation. In the Connection Manager dropdown list, choose the database where you created dbo.SSIS_Import. Click the “Component Properties” tab. Within the “Component Properties” tab, you will need to click the ellipses next to the “SQL Command” property. Paste the code presented below into the box and click ok.

UPDATE dbo.SSIS_Import

SET

SomeInt = ?,

SomeChar = ?

FROM dbo.SSIS_Import import

WHERE SomeId = ?

The code above is a pretty simplistic UPDATE statement. The key thing to note is the “?”. Each of the ‘?” will be given a parameter value in SSIS. The parameters are dynamically named in the order they appear in the command. Next, I will be mapping these parameters to my input columns. Click the “Column Mappings” tab. Align the input columns to each parameter. My column mapping is shown below. Once complete, click “Ok.”

That it!!! We have successfully created an UPSERT operation using SSIS… but wait what is the catch? The catch is the UPDATE will operate like a cursor processing one UPDATE command at a time, which can be a nightmare from a performance standpoint. You may be wondering how we can make this process set based. There is not much you can do in SSIS alone, so I will need to find other means. The best way to make this process more scalable is to leverage SQL Server and SSIS.

The first step is to drop the OLE DB command I just created. I will then drag a OLE DB Destination to the canvas and connect it to the Lookup Match Output. My canvas looks like below.

Next, I will switch gears and write some TSQL code. I will need to open SSMS and connect to the database where dbo.SSIS_Import exists. Firstly, I have to create a view. I will not use the view to select data, but will use the view as a intermediate object to insert data.

CREATE VIEW vw_SSIS_Import

AS

SELECT [SomeId],[SomeInt],[SomeChar]

FROM dbo.[SSIS_Import]

GO

Now that my view is created, I am going to create an INSTEAD of trigger, on my view. The instead of trigger will allow me to use my OLE DB Destination to bulk insert from SSIS. The bulk insert from SSIS gives me a mechanism to pass the rows for UPDATE, as a set of data. Once I have the rows in a set, the trigger can efficiently UPDATE the data.

CREATE TRIGGER trg_UPdate_SSIS_Import

ON dbo.vw_SSIS_Import

INSTEAD OF INSERT

AS

BEGIN

UPDATE import

SET

SomeInt = i.SomeInt,

SomeChar = i.SomeChar

FROM dbo.SSIS_Import import

INNER JOIN inserted i

ON i.SomeId = import.SomeId

END

GO

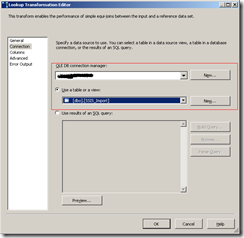

In the code above, I am using efficient TSQL to update all rows that are inserted into the view, from the SSIS package. I am now ready to configure the OLE DB Destination. Double-click the OLE DB Destination to launch the configure dialog box. Make sure your package is using the right connection manager and choose the view. Click the “Column Mappings” tab and then click '”Ok.” Right-click the OLE DB Destination and choose properties. In the “Fast Load Options”, type “FIRE_TRIGGERS.” This allows the SSIS insert to fire the INSTEAD OF trigger, on the view. That’s it we are done!!! The view/INSTEAD OF TRIGGER method is extremely easy to configure and implement; however, it does require that additional database objects to be created.

There you have it. I have demonstrated two methods to UPSERT data, using SSIS. The first method relies on the OLE DB Command, which has the limitation of having to run for each and every row. The latter option is a more scalable solution that requires the creation of a view and trigger. Both methods have pros and cons and I leave it to you to determine which is best for your environment.

Until next time, Happy Coding.

PS: If you want to do all of the work in SQL, you can allow the trigger to perform the UPDATE and INSERT. This method reduces the complexity of the SSIS package because you only need a single source and destination, with no Lookup.

Download SSIS Package:

Note: The package was created in BIDS 2008, which is not compatible with BIDS 2005.