I came across an interesting thread in the MVP newsgroups a few weeks back and thought I would share it’s content here. The thread was about providing a reliable method to load a table, while keeping the downtime to a minimum. As an added bonus the users still want to be able to query the old data, while you are loading the new data. I would like to point out that I did not come up with this solution. I got this solution from Aaron Bertrand, http://sqlblog.com/blogs/aaron_bertrand/. His solution is absolutely fantastic. Aaron's solution is by far the best way to attack this problem, in my opinion.

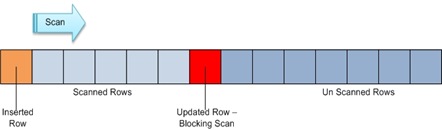

Instinctively, the first solution that comes to most of our minds is an insert into/select statement, with locking hints. This solution is not a scalable one and does not adhere to our business requirements. I will start by creating a sample table and then we can dig into how to solve this problem.

USE [tempdb]

GO

IF EXISTS(SELECT 1 FROM sys.tables WHERE name = 'TestData')

BEGIN

DROP TABLE [dbo].[TestData];

END

GO

CREATE TABLE [dbo].[TestData](

RowNum INT PRIMARY KEY,

SomeCode CHAR(2)

);

GO

INSERT INTO [dbo].[TestData] (RowNum,SomeCode)

SELECT TOP 10000

ROW_NUMBER() OVER (ORDER BY t1.NAME) AS RowNumber,

CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65) AS SomeCode

FROM

[Master].[dbo].[SysColumns] t1,

[Master].[dbo].[SysColumns] t2

GO

Okay with our table out of the way, we can start to really think about how to solve this problem. The first step in solving this problem is to create two new schemas. The first schema is called “Holder” and this schema will be a holder or container, for our table that we will be loading with new data.

--create Holder schema

CREATE SCHEMA [Holder];

GO

The Holder schema does just what the name implies… it holds the table that I will be inserting into. The next step is to create a table that matches the same definitions, as the one above, but in the Holder schema.

--Create TestData table in the Holder schema

CREATE TABLE [Holder].[TestData](

RowNum INT PRIMARY KEY,

SomeCode CHAR(2)

);

GO

With our schema and table created, I only have one other schema to create. The last schema is the Switch schema. The Switch schema is used as an intermediary schema to house the current source table (dbo.TestData) while the loaded table in the Holder schema (Holder.TestData) is transferred to the dbo schema (dbo.TestData).

--Create Switch schema

CREATE SCHEMA [Switch];

GO

This solution adheres to all of our business rules and reduces downtime to the amount of time required to perform a schema metadata operation, which is nearly instantaneous. This is a very scalable solution because the loading of the data is completely transparent to the users, all-the-while allowing them to query the stale data. Let’s have a look at the final solution:

--Create procedure to load the table

CREATE PROCEDURE [dbo].[usp_LoadTestData]

AS

BEGIN

SET NOCOUNT ON;

--Truncate holder table

TRUNCATE TABLE [Holder].[TestData];

--load new data into holder table

INSERT INTO [Holder].[TestData] (RowNum,SomeCode)

SELECT TOP 500

ROW_NUMBER() OVER (ORDER BY t1.NAME) AS RowNumber,

CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65) AS SomeCode

FROM

[Master].[dbo].[SysColumns] t1,

[Master].[dbo].[SysColumns] t2

BEGIN TRANSACTION

-- move "live" table into the switch schema

ALTER SCHEMA [Switch] TRANSFER [dbo].[TestData];

-- move holder populated table into the "live" or dbo schema

ALTER SCHEMA [dbo] TRANSFER [Holder].[TestData];

-- Move the prior table to the holder schema

ALTER SCHEMA [Holder] TRANSFER [Switch].[TestData];

COMMIT TRANSACTION

END

GO

Let’s see how it works. Execute the code and then query the dbo.TestData table. You will see that the table now contains 500 rows instead of the 10000 I started with.

EXEC dbo.[usp_LoadTestData];

SELECT * FROM dbo.TestData;

Note: If you are interested in seeing how the solution handles locks, you can strip all the code out of the stored procedure and run everything but the commit transaction. You can then open another window and try to query the table, which will result in a wait until the schema transfer is committed.

That’s it!!! This is by far the best method I have seen to solve this business problem. It is very scalable, has very little downtime, and is not affected by the NOLOCK hint. I am really happy and thankful that Aaron shared his solution. This solution has given me a lot of insight to solving this problem and similar problems. Hopefully this post will have the same effect on you.

Until next time, happy coding.