This is part two of a two part series. In part 1 of this series I demonstrated the most popular methods used to parse/split a delimited string of values, http://jahaines.blogspot.com/2009/11/splitting-delimited-string-part-1.html. In this article I will be focusing on the performance implications of each method presented. I will start of by giving a disclaimer that your results may vary from the results presented in this article. Although the numbers may differ, the data trend should be somewhat consistent with my results. I will be tracking three key performance counters: CPU, Duration, and Reads against varying string sizes and data loads. In addition to a varying delimited strings length and load, I have performed each query 10 times and taken an average. I did this to ensure that I get the most accurate results. Enough talk let’s dive into our first test.

Note: I am not going to walk through how I did each test, but I will link all my scripts at the bottom of this post

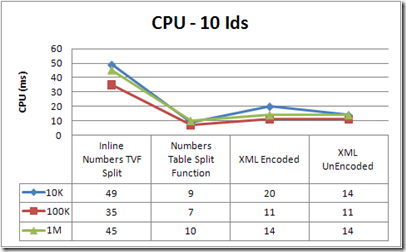

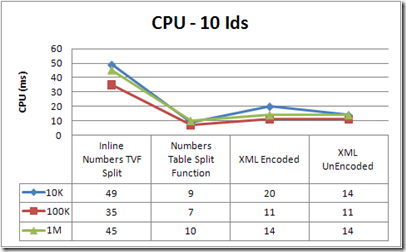

The first test is testing how CPU usage differs between methods. I tested a delimited string of exactly 10 Ids over a table with 10,000 rows, 100,000 rows and 1,000,000 rows. As you can see, the permanent numbers table TVF is by far the best solution. The CPU is highest on the inline Numbers TVF. The inline numbers table is the most expensive because SQL Server has to do a lot of processing and calculation on the fly, whereas the permanent numbers table mostly has to read data, which means the IO will be much higher. Both XML methods perform much better than the inline numbers table, but are nearly twice as slow as the permanent numbers TVF because they require more CPU intensive processing, which is derived from SQL converting the data to XML and transforming the XML back to a relational format. It takes more processing power to encode and decode the XML than to simply convert it, so you should only use the encode/decode method, if your data contains special XML characters, http://msdn.microsoft.com/en-us/library/aa226544(SQL.80).aspx.

Winner of Round 1: Numbers Table Split Function

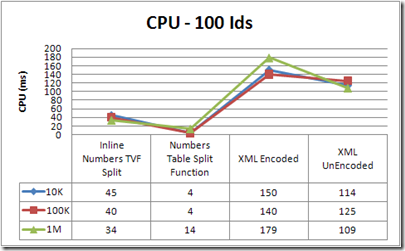

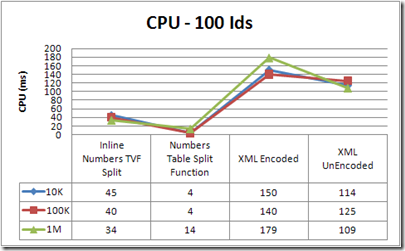

The next test was performed on a table with 10,000, 100,000 rows, 1,000,000 rows, using a string consisting of 100 Ids. If you look at the chart below, you will see a trend. As the number of Ids increases, so does the cost of the XML method. CPU usage actually increases at a exponential rate, which makes it the least scalable solution. Obviously the Numbers Table TVF is the clear winner here.

Winner of Round 2: Numbers Table Split Function

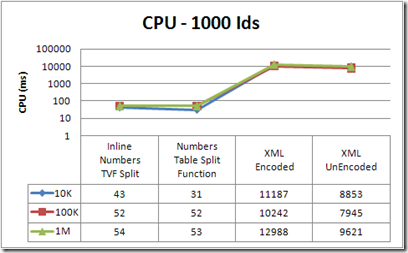

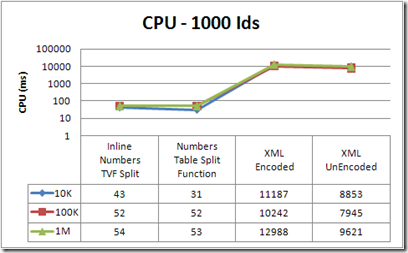

The final test was taken over the same load, but I supplied a string of 1000 Ids. As you can see with 1000, ids the results depict more of the same behavior. The big take away is the XML method should only be used when the number of values in the delimited string is relatively small.

Winner of Round 3: Numbers Table Split Function

The next counter I will be focusing on is Duration. Duration is not a very reliable counter, as it is very dependent on other processes running on the machine; however, it does provide insight to performance. The first test will be done over the same load. I will begin with 10 Ids again.

The results are a little more aligned in this test. The Numbers Table Split TVF is the best performing on average, followed by the XML methods. Again there is a higher performance cost to encode XML, so do so only when necessary. Duration does give you a general idea about performance, but these results definitely do not carry as much weight as the other counters.

Winner of Round 4: Numbers Table Split Function

The next step is to increase the number of Ids to 100. I wont repeat the same stuff over again I promise. This test yields more of the same.

Winner of Round 5: Numbers Table Split Function

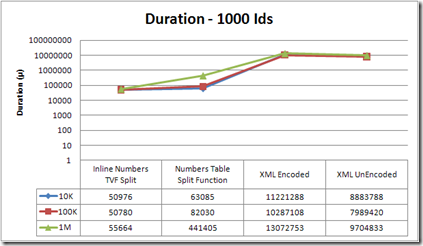

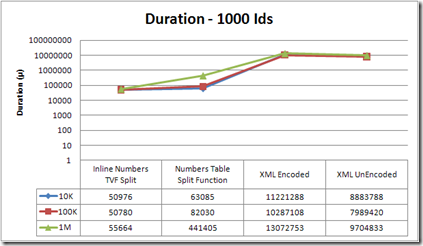

Next, I bump the number of Ids to 1000. Here we go….. a different result :) . In this example, the numbers table actually performed worse than the inline number TVF. You may be wondering why the duration is worse for the numbers table split function. I suspect the answer is the number of reads makes the query take longer to execute; however, there could have been something running on my machine when profiler captured the data. This is a prime example of why duration can be an unreliable counter. I cannot tell if the query is actually worse or if an environmental factor on my laptop may have skewed the timing. I will take the high road :^) and assume the reads impacted the timing because I had no known programs running. The XML results are just disturbing…… For as much as I recommend XML split solutions on the forums, these results are just scary.

Winner of Round 6: Inline Numbers TVF Split

The final counter I will be testing is reads. This is by far one of the most important counters because it impacts so many facets of SQL Server performance. Do not let the number of reads for the Numbers Table TVF persuade you to avoid it. A permanent numbers table TVF is going to have more reads. Essentially you are reading table values from cache/disk instead of calculating them, so the numbers of reads is greater. The obvious choice for this test is the inline numbers table TVF.

Winner of Round 7: Inline Numbers TVF Split

The next test increases the number of Ids to 100. As for the results, we see more of the same.

Winner of Round 8: Inline Numbers TVF Split

Finally, I will increase the number of Ids to 1000. Again more of the same. The number or reads, stays relatively consistent across all solutions. The XML solution does better than the numbers table TVF here, but it just does not scale well at any other level. I used to be a firm believer in the XML method, but I think I am going to start primarily recommending the number table TVF or an inline number table TVF.

Winner of Round 9: Inline Numbers TVF Split

The verdict

So what is the verdict? Well, again I cannot reiterate enough that no one solution is always better than another. Different solutions work best in different situations and environments. In my tests, there is a clear winner, the permanent numbers table TVF. Even though this is the “winner”, I have overwhelming evidence that says I should be using a numbers table to split a string, regardless of the numbers table being permanent or inline. I am happy with these results because the permanent numbers table split function performs well and is very easy to implement. Another benefit of the permanent numbers table TVF solution is that it works in all versions of SQL. Why would you not want to use the permanent numbers table? You may be willing to accept more CPU consumption to reduce IO, or perhaps you do not want to maintain another table. If this is the case, an inline numbers table solution is the way to go. All-in-all, the XML method really did surprise me and I find it sad that this method does not perform well. There is just not enough incentive to use an XML solution when an easier and better performing solution exists. Remember that you should test each method in your environment to see which works best in your environment.

I hope that you have learned something and can use this information to make more informed decisions when deciding to find a method to split delimited strings.

**** UPDATE *****

I did have some bugs in the code for the 1000 ids test. I guess my relaxed brain never came home from vacation. A special thanks goes out to Brad Schulz for spotting the bug. I am glad that the end result is for the most part the same. The only real deviation occurred in the reads category. The numbers of reads should be comparable for all methods because the same amount of data should be returned, but in my original result set they were not. I resolved the bug and have since updated the scripts and the results.

****UPDATE*****

A good friend and fellow SQL server enthusiast Brad Schulz recently posted a great entry, on parsing delimited strings with XML. His findings show how using the XML method in certain ways can cause the query to really bomb; however, you can avoid some performance penalties by casting and storing the delimited string in a XML data type, instead of casting and parsing the XML inline. I will not go into detail about why the inline XML is slower because I want you to read it right from the horse’s mouth, http://bradsruminations.blogspot.com/2009/12/delimited-string-tennis-anyone.html. When I changed my code to use a XML variable, the XML methods were just as performant as the numbers table methods. I do not know about you, but I am ecstatic. I for one love the XML method and am very excited to see that it is and can be just as performant, when used in the right context.

Download the script files: http://cid-6f041c9a994564d8.skydrive.live.com/self.aspx/.Public/Split%20Delimited%20String/BlogPost^_UnpackDelimitedString.zip