Today a colleague asked me a question about performance optimization regarding joins. I gave him a pretty detailed answer over the phone, but I do not think I really made my message stick, which is the reason for this post. The first and most important thing to remember is the optimizer can only choose one physical operator per table. The only exception to this is when the optimizer decides to use index intersection, http://www.sqlmag.com/Articles/ArticleID/94116/94116.html?Ad=1 (SQL 2005+). Index intersection occurs when the optimizer creates its own join and uses two indexes to satisfy the predicate. While index intersection is still aligned with what I said, index intersection does add an additional table reference to the query plan, which should be noted. If the same table is referenced multiple times, the execution plan will have more than than one physical operator at varying stages of the execution plan. When tables are joined together SQL Server creates a constrained Cartesian product, which is nothing more than matching rows based on a given join expression. To do this SQL Server uses a join (Merge, Hash or Nested Loop) and creates an INNER (Bottom) and OUTER (Top) set. I use the word set here because the INNER does not have to be a table. INNER can actually be a constrained Cartesian product. Essentially, the optimizer chooses a base (OUTER) set and will then filter the INNER set based on the results from the outer set. The optimizer then continues to filter the set for each join, until the query is satisfied. It is important to know that the optimizer is under no obligation to join tables in the order you have specified. The optimizer is free to rearrange joins as it sees fit. Let’s start by creating our sample objects.

USE [tempdb] GO SET NOCOUNT ON; GO IF OBJECT_ID('tempdb.dbo.State') IS NOT NULL BEGIN DROP TABLE dbo.[State]; END GO CREATE TABLE dbo.[State]( State_Cd CHAR(2), Descr VARCHAR(150) ); GO INSERT INTO dbo.[State] ([State_Cd],[Descr]) VALUES ('AL','Alabama'); INSERT INTO dbo.[State] ([State_Cd],[Descr]) VALUES ('LA','Louisiana'); GO IF OBJECT_ID('tempdb.dbo.City') IS NOT NULL BEGIN DROP TABLE dbo.[City]; END GO CREATE TABLE dbo.[City]( State_Cd CHAR(2), City_Cd VARCHAR(100) ); GO INSERT INTO dbo.[City] ([State_Cd],[City_Cd]) VALUES ('AL','Mobile'); INSERT INTO dbo.[City] ([State_Cd],[City_Cd]) VALUES ('LA','New Orleans'); INSERT INTO dbo.[City] ([State_Cd],[City_Cd]) VALUES ('LA','Luling'); GO IF OBJECT_ID('tempdb.dbo.Zip') IS NOT NULL BEGIN DROP TABLE dbo.[Zip]; END GO CREATE TABLE dbo.Zip( City_Cd VARCHAR(100), Zip_Cd VARCHAR(10), ); GO INSERT INTO dbo.Zip ([City_Cd],[Zip_Cd]) VALUES ('Mobile','36601'); INSERT INTO dbo.Zip ([City_Cd],[Zip_Cd]) VALUES ('New Orleans','70121'); INSERT INTO dbo.Zip ([City_Cd],[Zip_Cd]) VALUES ('Luling','70070'); GO

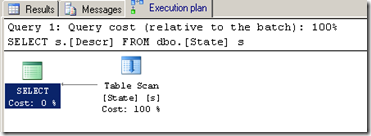

Now that we have our tables, lets execute a very simplistic query.

Note: There are no indexes present on any tables, so all queries will result in a table scan.

--Qry 1 SELECT s.[Descr] FROM dbo.[State] s

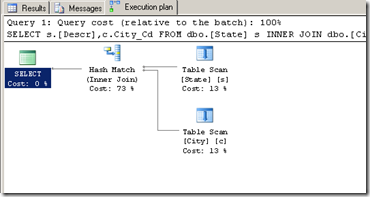

Now let’s add a second table to the query to see what happens. What we should see is a nested loop join and two table scans.

--Qry 2 SELECT s.[Descr],c.City_Cd FROM dbo.[State] s INNER JOIN dbo.[City] c ON s.[State_Cd] = c.[State_Cd]

The screenshot above shows the State table has been chosen as the base query. As you can see, each table is represented by a single Table scan operator. Let’s add one more table to the mix to see how the optimizer will react.

--Qry 3 SELECT s.[Descr],c.City_Cd,z.Zip_Cd FROM dbo.[State] s INNER JOIN dbo.[City] c ON s.[State_Cd] = c.[State_Cd] INNER JOIN dbo.[Zip] z ON z.[City_Cd] = c.[City_Cd]

The optimizer decided to make the first OUTER table State and then decided to make the INNER a constrained Cartesian product of City and Zip . This is especially important when you are optimizing code because it is a lot easier to fix problems when you know how indexes and joins really work. For this example, I see that we are scanning Zip and City, so that tells me that I am missing an index on these two tables. Remember the general rule of thumb is to have indexes on all column participating in the join expression. I will start the optimization process by adding an index to the Zip table, on the City_Cd column.

CREATE NONCLUSTERED INDEX ncl_idx_Zip_City_Cd ON dbo.[Zip](City_Cd);

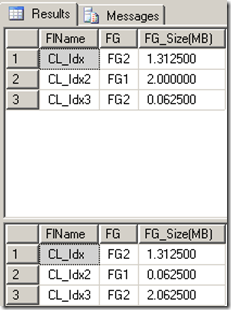

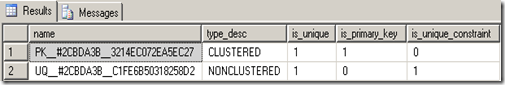

Wow, look at how the query plan changed. We now see our index seek on Zip, but we now see a RID lookup. Lookups can become performance bottlenecks really quickly and can sometimes cause blocking or even worse dead locks. Key Lookups can cause blocking and deadlocks because the optimizer has to take a shared lock on the Clustered Index to get the data that is missing from the nonclustered index and this causes a problem when an insert/update/delete occurs because it requires an exclusive lock, on the Clustered Index. In our example, We have an index on Zip which only contains the column City_Cd; however, we are selecting Zip_Cd. Because Zip_Cd does not exist in the index, the optimizer has to go back to the heap to get the remaining column data. To solve this problem we need to add Zip_Cd to the index. I will be adding the Zip_Cd column via the INCLUDE clause. I chose INCLUDE because I am not using this column in the predicate and using the INCLUDE clause keeps the index relatively small because the value is only stored at the leaf level of the nonclustered index. You may want to add the column to the index key if you use the column in a lot of predicates because SQL Server maintains statistics on index key columns, but not columns in the INCLUDE clause. Statistics are used used by SQL Server to estimate cardinality. Better cardinality estimates allow the optimizer to make better decisions about what operators are best for the given query. Essentially, better cardinality estimates can be the difference between a scan and a seek. You have to weigh the cost of index maintenance and performance when deciding which method to choose. For more information regarding INCLUDE please visit this link, http://msdn.microsoft.com/en-us/library/ms190806.aspx.

Note: If you are not sure what columns need to be added to the index you can hover your mouse over the lookup and look at the output list. The index that you need to add the columns too will always be the seek operator to the right of the lookup operator.

IF EXISTS(SELECT 1 FROM sys.indexes WHERE name = 'ncl_idx_Zip_City_Cd') BEGIN DROP INDEX ncl_idx_Zip_City_Cd ON dbo.Zip; END GO CREATE NONCLUSTERED INDEX ncl_idx_Zip_City_Cd ON dbo.[Zip](City_Cd)INCLUDE([Zip_Cd]); GO

Now Execute the query again.

--Qry 3 SELECT s.[Descr],c.City_Cd,z.Zip_Cd FROM dbo.[State] s INNER JOIN dbo.[City] c ON s.[State_Cd] = c.[State_Cd] INNER JOIN dbo.[Zip] z ON z.[City_Cd] = c.[City_Cd]

Now that is a little better but lets make this query even faster. Next, I will add an index to the City table, making sure to include City_Cd.

CREATE NONCLUSTERED INDEX ncl_idx_City_State_Cd ON dbo.City(State_Cd)INCLUDE([City_Cd]); GO

Now execute the query again.

--Qry 3 SELECT s.[Descr],c.City_Cd,z.Zip_Cd FROM dbo.[State] s INNER JOIN dbo.[City] c ON s.[State_Cd] = c.[State_Cd] INNER JOIN dbo.[Zip] z ON z.[City_Cd] = c.[City_Cd]

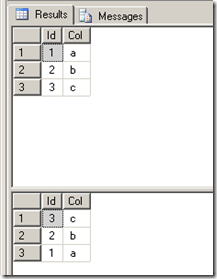

How about them apples? By adding the proper indexes in place we can now get index seeks across the board. It is important to note that we cannot seek State because we have no predicate filter on any columns in the state table, so the optimizer has to scan. When you are trying to optimize queries the first place to look is the execution plan. If you see a lot of scans, you have a lot of optimization potential. Remember that you want to make sure all columns in the select, the join and the where clause are present in your index. Please do not tell your boss that you need to create indexes to cover every query in your environment. You will not be able to fully cover every query in your environment, but the important thing is to optimize and cover the queries that are really expensive or causing problems. Who knows you maybe able to cover multiple queries by creating or modifying a single index.

That’s it for now. I hope I have cleared up how the optimizer handles joins and given you greater insight on how to optimize joins. Until next time happy coding.