This week I decided I wanted to take a step back from my performance tuning series and present material that DBAs and developers may not know. I will be focusing on SQL Server Management Studio (SSMS) tips and tricks.

Keyboard Shortcuts:

SQL Server Management Studio allows keyboard shortcuts that can help you save time and increase efficiency. Keyboard shortcuts allow you to execute TSQL commands at the push of a button. The best part about keyboard shortcuts is the shortcut can be used to pass highlighted text as parameters. I will start with some of the built-in keyboard shortcuts.

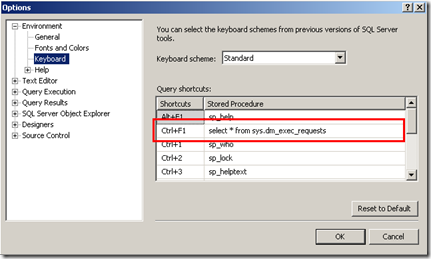

To open the keyboard shortcuts menu, click Tools –> Options –> Expand Environment –> Click Keyboard. Now you will note that keyboard shortcuts are loaded by default. Some of the defaults are sp_help (Alt+F1), sp_who (Ctrl+2) etc… You can implement just about any code you want in the keyboard shortcut. For example, you can put the query “select * from sys.dm_exec_requests” directly into the text box. The beauty of this is you can open a new query window and just hit the shortcut keys to execute the query. This saves time and makes life a bit easier. Below is a screenshot of my shortcut list, including the query I posted above.

Well that is all good and nice but what else can shortcuts do. The best part about shortcuts in my opinion is they can actually be used to execute stored procedures and supply highlighted values as parameters.

Lets run sp_help but we will never type the text sp_help. Open a new query window and create a new table.

CREATE TABLE dbo.MyTable(ID INT);

Now type MyTable in the query window and highlight the text. Once the text is highlighted, hold Alt and press F1. You should see the results of sp_help displayed for the highlighted object, “MyTable.”

Note: If you need to specify an object in a different schema, you have to use the two part name “schema.Object” and put it in single quotes. For example, ‘dbo.MyTable’.

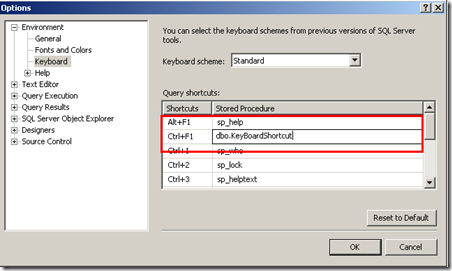

Now that is pretty cool right? What I will show you next is even cooler! I will execute a user defined stored procedure with parameters Open the keyboard shortcut menu and assign the below stored procedure to a shortcut key.

Open a new query window and create the below stored procedure.

CREATE PROCEDURE dbo.KeyBoardShortcut(@Id INT,@Col CHAR(1)) AS BEGIN SELECT @Id,@Col END GO

Now type the text below and highlight it. Once highlighted, hit Ctrl+F1. (Note: If you assigned the procedure to a different shortcut, you will need to use that shortcut)

1,'a'

As you can see, the stored procedure was executed with our highlighted parameters! This is a awesome feature that can save a lot of time, especially when you have custom code that you need to access frequently. This increases efficiency and puts more information at your fingertips. One of my favorite shortcut keys is sp_helptext. You can use create a shortcut for sp_helptext and highlight any procedure, function, view, trigger, or stored procedure and get the create script of that object.

Listing All Table Columns

This is a request that I see very often. The request usually revolves around developers who have a lot of columns in their table and have to select from most of them. As you can imagine, typing each column name can be a very tedious process. There are two methods that I use to accomplish this task, when I am feeling a little lazy…… BUT the key is to work smarter not harder right :^) !

The first method is to right-click the table click script table as –> SELECT To –> New Query Window. Voila we now have a select query that lists all the columns in the table. You can perform the same steps to get a list of all columns in a view.

The next method is to expand the table –> Drag the columns folder into the query window. This will generate a column delimited list of the column names.

Scripting Multiple Objects

I am sure many of you have tried to script multiple objects from Management Studio, but have had little luck. Now, we could choose to right-click the database –> tasks –> generate scripts, but where is the fun it that? This is an easier way that does not make you jump through hoops or follow a wizard. The trick is to click on the folder containing the objects you want to script and then look at the Object Explorer Details pane. Inside the Object Explorer Details pane you can Ctrl or Shift click multiple objects. Once selected, you can right-click –> Script <Object Type> AS –> Create –> To New Window.

Creating a TSQL Toolkit

One of the most underrated or unknown features of SQL Server Management Studio is Template Explorer. You may be thinking that template explorer is used specifically for TSQL templates, but I will show you a couple of ways to make Template Explorer function as a TSQL Toolkit. Click CTRL+ALT+T or go to view –> Template Explorer to open the window.

Now this does not look that impressive from the get, but trust me it can and does get better. Right-click SQL Server Templates and choose New –> then click folder. Give the folder a name. I will be using the name Test_Scripts. Next create a new template in the Test_Scripts folder. I named the new template sys.dm_exec_requests. Your template explorer should now look like this.

Okay, well that is great…… How does this help me? Well lets add some TSQL code to that new template. Once we add code to the template, We can then drag the template into any query window and SSMS will automatically post the code into the open query window. Basically, we can use Template Explorer as our personal Toolkit. We can create any folder structure we want and SSMS does a great job of keeping all our scripts organized. Let’s see this in action.

Right-click the sys.dm_exec_requests template and choose edit. Paste the code below and click save.

SELECT * FROM sys.dm_exec_requests

Open a new query window and drag the sys.dm_exec_requests template into the query window. Voila!!!!!!! the TSQL is automatically scripted into our new query window. As you can see, we can use the template explorer to save our scripts and make them easily accessible. This alleviates our need to open windows explorer or browse the file system for our scripts. Plus al the scripts are saved and managed in one place. If you need to copy your scripts out of template explorer, the actually already exist on the file system. The directory will be within your documents. On my SQL Server 2008 instance, my templates are stored here, C:\Documents and Settings\ahaines\Application Data\Microsoft\Microsoft SQL Server\100\Tools\Shell\Templates\Sql. I don’t know about you, but I for one love this feature. This gives me the ability to quickly get data, without having to leave SSMS, plus I do not have to waste time searching the file system. Give it a try and see what you think.

That is all the tips I have for now. I hope that you have learned something new or any of these tips can help you. Until next time, happy coding.